<!-- .slide: class="title" -->

# Extending VQL and the Velociraptor API

---

<!-- .slide: class="content small-font" -->

## Module overview

* VQL is really a glue language: we rely on VQL plugins and functions to do all the heavy lifting.
* To take full advantage of the power of VQL, we need to be able to extend its functionality easily.
* This module illustrates how VQL can be extended by including PowerShell scripts, invoking external binaries and extending VQL in Golang.
* For the ultimate level of control and automation, the Velociraptor API can be used to interface directly with the Velociraptor server from many supported languages (like Java, C++, C#, Python).

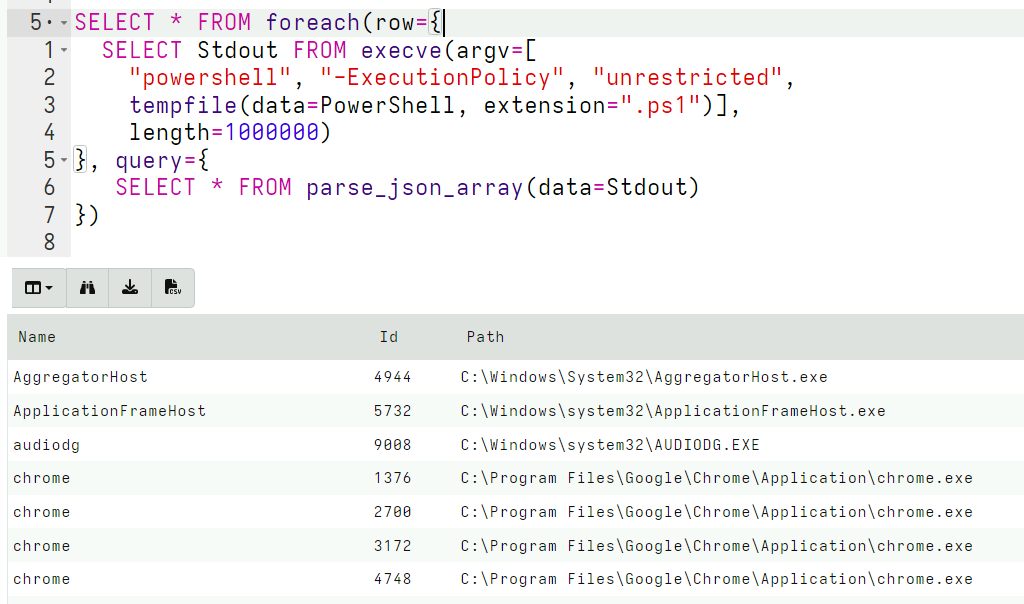

<!-- .slide: class="content " -->

## Extending VQL - Artifacts

* The most obvious tool for extending VQL is simply writing additional artifacts. We have seen this done extensively in previous modules.
* Artifacts serve to encapsulate VQL queries:
    * They allow us to reuse a complex VQL query without worrying too much about the implementation.
    * They allow the implementation to evolve over time, perhaps offering additional functionality or better algorithms.
* Ultimately, though, we are limited by the basic capabilities of the VQL engine.

---

<!-- .slide: class="content small-font" -->

## Extending artifacts - PowerShell

* PowerShell is a powerful systems automation language. It is mainly used on Windows systems, where it comes built in and is almost always available.
* Many complex software products ship PowerShell modules for automation and system administration.
* It does not make sense for Velociraptor to directly support complex software packages like Office365, Azure etc.
* But it is critical to be able to recover forensically relevant data from these packages.
* Therefore it makes sense to wrap PowerShell scripts in VQL artifacts.

---

<!-- .slide: class="content " -->

## Exercise: PowerShell based pslist

* This is not a PowerShell course! So for this example we will use the following very simple snippet of PowerShell:

```powershell
Get-Process | Select Name, Id, Path
```

<img src="powershell_pslist.png" class="title-inset" />

---

<!-- .slide: class="content " -->

## Exercise: PowerShell based pslist

* The `execve()` plugin takes a list of args and builds a correctly escaped command line.
* In many cases we don't need to encode the command line:

```sql
LET PowerShell = "Get-Process | Select Name, Id, Path"

SELECT * FROM execve(argv=[
    "powershell", "-ExecutionPolicy", "unrestricted",
    "-c", PowerShell])
```

---

<!-- .slide: class="content " -->

## Alternative - encode scripts

```sql
LET PowerShell = "Get-Process | Select Name, Id, Path"

SELECT * FROM execve(argv=[
    "powershell", "-ExecutionPolicy", "unrestricted",
    "-encodedCommand",
    base64encode(string=utf16_encode(string=PowerShell))])
```

---

<!-- .slide: class="content " -->

## Alternative - tempfile scripts

```sql
LET PowerShell = "Get-Process | Select Name, Id, Path"

SELECT * FROM execve(argv=[
    "powershell", "-ExecutionPolicy", "unrestricted",
    tempfile(data=PowerShell, extension=".ps1")])
```

* Temporary files are automatically cleaned up at the end of the query.

---

<!-- .slide: class="content " -->

## Dealing with output

* Using the `execve()` plugin we can see the output in `Stdout`.
* It would be better to be able to deal with structured output, though.
* We can use PowerShell's `ConvertTo-Json` to convert the output to JSON, then use Velociraptor's `parse_json()` family of functions to obtain structured output.
* This allows VQL to operate on the result set as if it had been generated natively by a VQL plugin!

---

<!-- .slide: class="content small-font" -->

## Parsing JSON output

* PowerShell outputs a single JSON array containing all the rows.
* We need to parse it in one operation, so we need to buffer all of `Stdout` in memory (set `length=1000000`).
```sql
LET PowerShell = "Get-Process | Select Name, Id, Path | ConvertTo-Json"

SELECT * FROM foreach(row={
    SELECT Stdout FROM execve(argv=[
        "powershell", "-ExecutionPolicy", "unrestricted",
        tempfile(data=PowerShell, extension=".ps1")],
        length=1000000)
}, query={
    SELECT * FROM parse_json_array(data=Stdout)
})
```

---

<!-- .slide: class="full_screen_diagram" -->

## Encoding PowerShell into JSON

---

<!-- .slide: class="content small-font" -->

## Reusing PowerShell artifacts

* Since our PowerShell script is now encapsulated in an artifact, we can use it inside other artifacts and plain VQL by calling `Artifact.Custom.Powershell.Pslist()`.
* Users of this artifact don't care what the PowerShell script is or what it does: we have encapsulation!

<img src="custom_powershell_artifact.png" style="width: 60%" class="" />
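
The transformations on the earlier PowerShell slides can be checked outside Velociraptor. The minimal Python sketch below uses illustrative function names of our own. It shows that `-encodedCommand` expects base64 over the UTF-16LE bytes of the script, which is why the VQL applies `utf16_encode()` before `base64encode()`. It also shows one way to cope with `ConvertTo-Json` emitting a bare object, rather than a one-element array, when the pipeline yields only one item.

```python
import base64
import json


def encode_powershell(script: str) -> str:
    """Return the -encodedCommand form of a script: base64 over UTF-16LE bytes."""
    return base64.b64encode(script.encode("utf-16-le")).decode("ascii")


def build_argv(script: str) -> list:
    """Assemble the same argument list the VQL execve() example passes."""
    return [
        "powershell", "-ExecutionPolicy", "unrestricted",
        "-encodedCommand", encode_powershell(script),
    ]


def rows_from_convertto_json(stdout: str) -> list:
    """Parse ConvertTo-Json output into a list of rows.

    A pipeline that yields a single object is serialized as a bare JSON
    object rather than a one-element array, so wrap it in a list.
    """
    text = stdout.strip()
    if not text:
        return []  # an empty pipeline produces no output at all
    data = json.loads(text)
    return data if isinstance(data, list) else [data]


if __name__ == "__main__":
    print(build_argv("Get-Process | Select Name, Id, Path"))
```

This is handy for confirming that an encoded argument in an artifact decodes to the script you intended.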

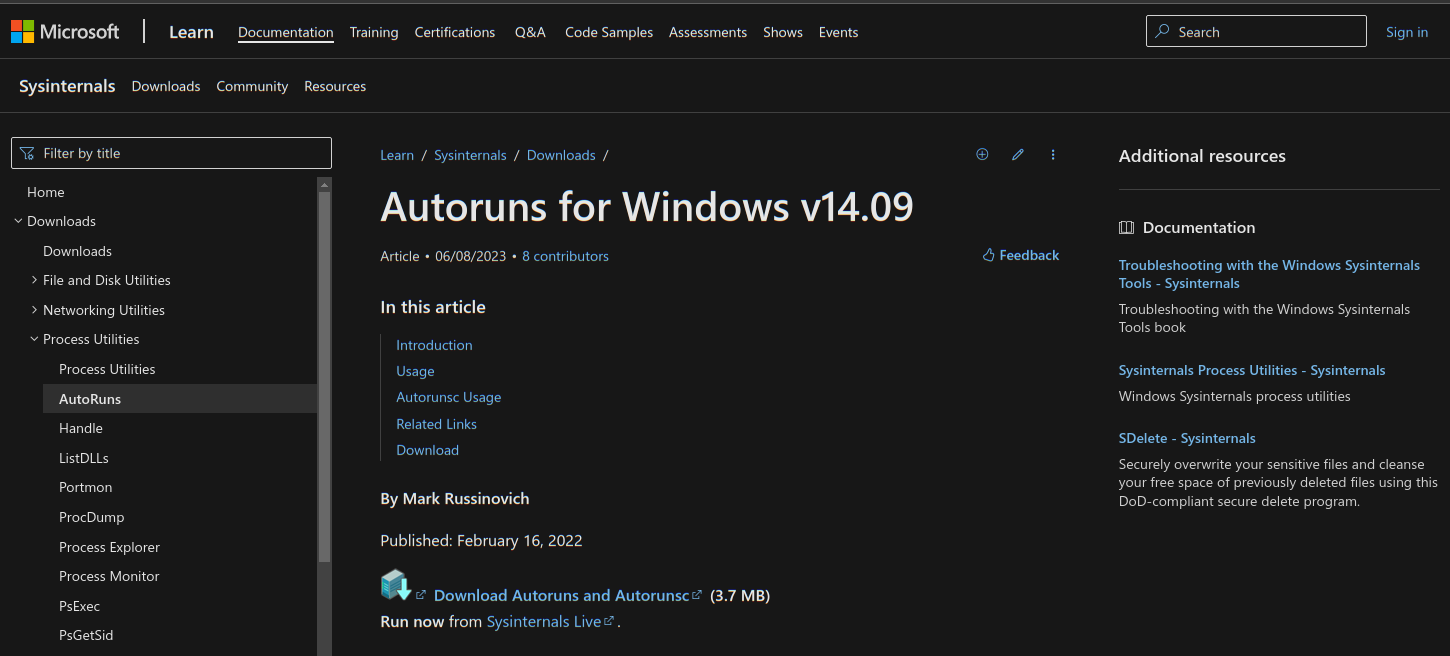

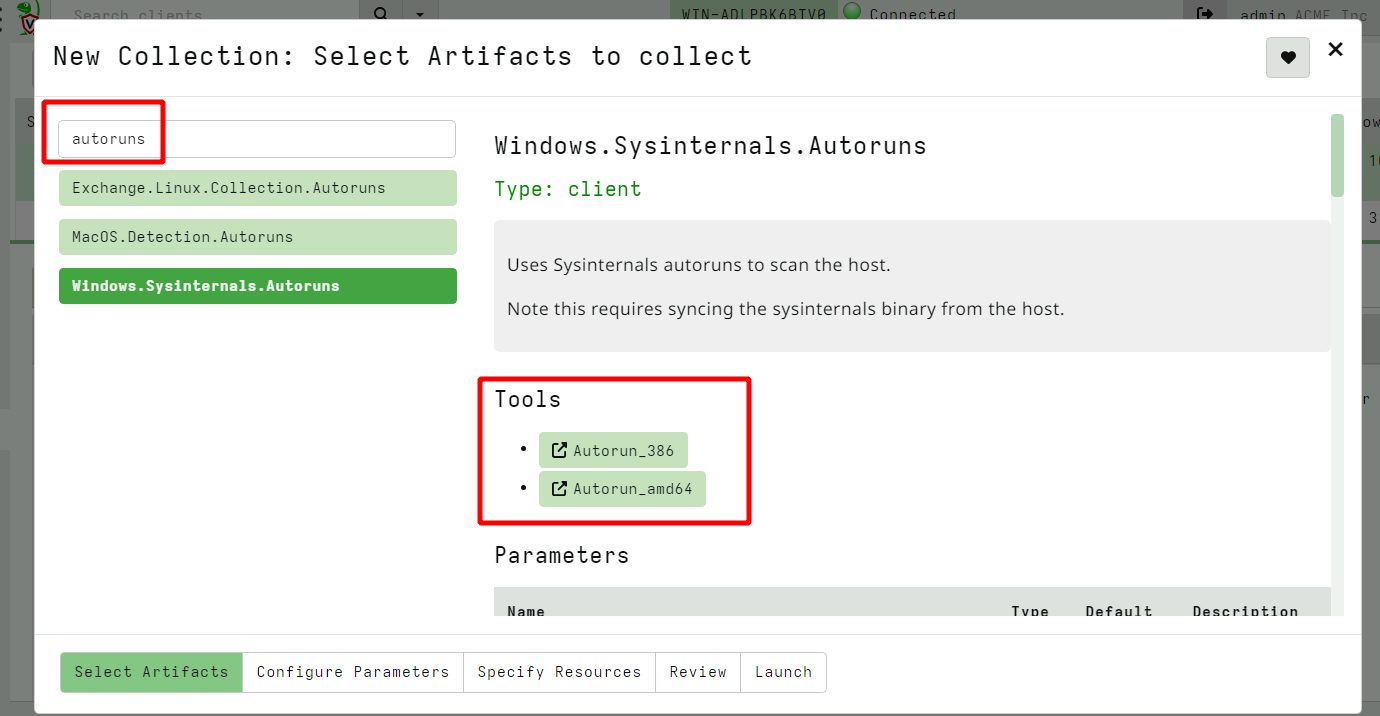

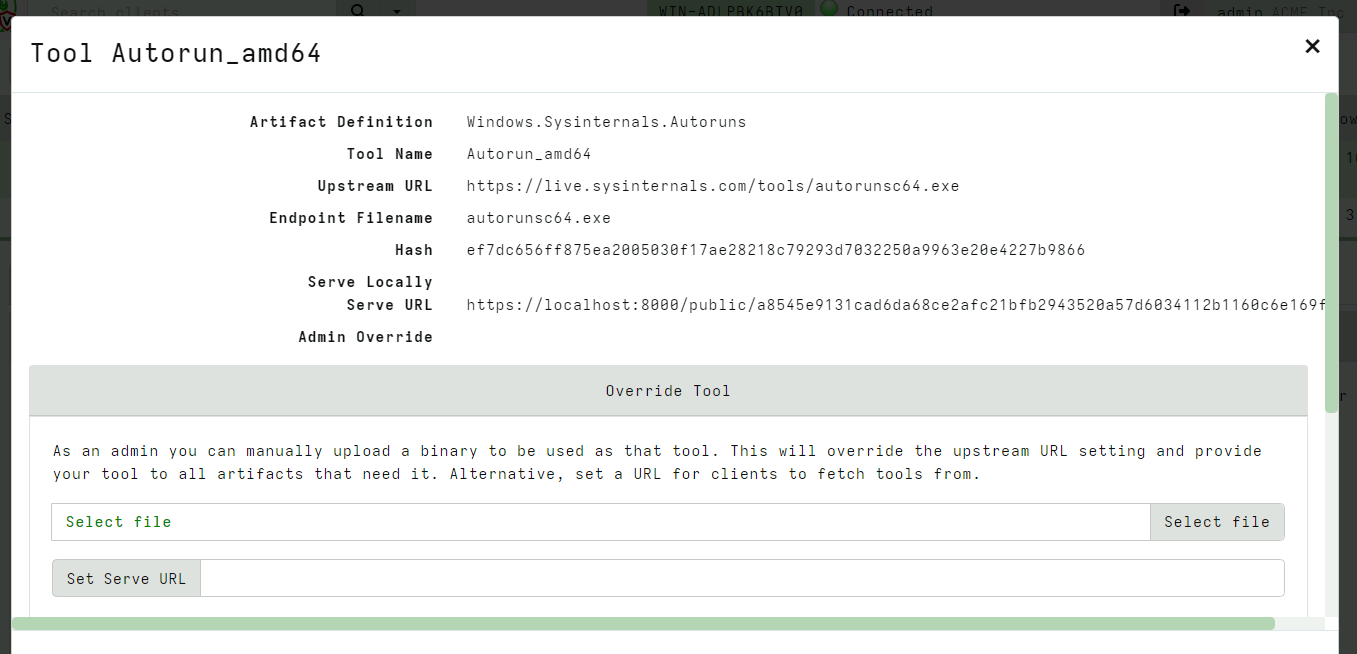

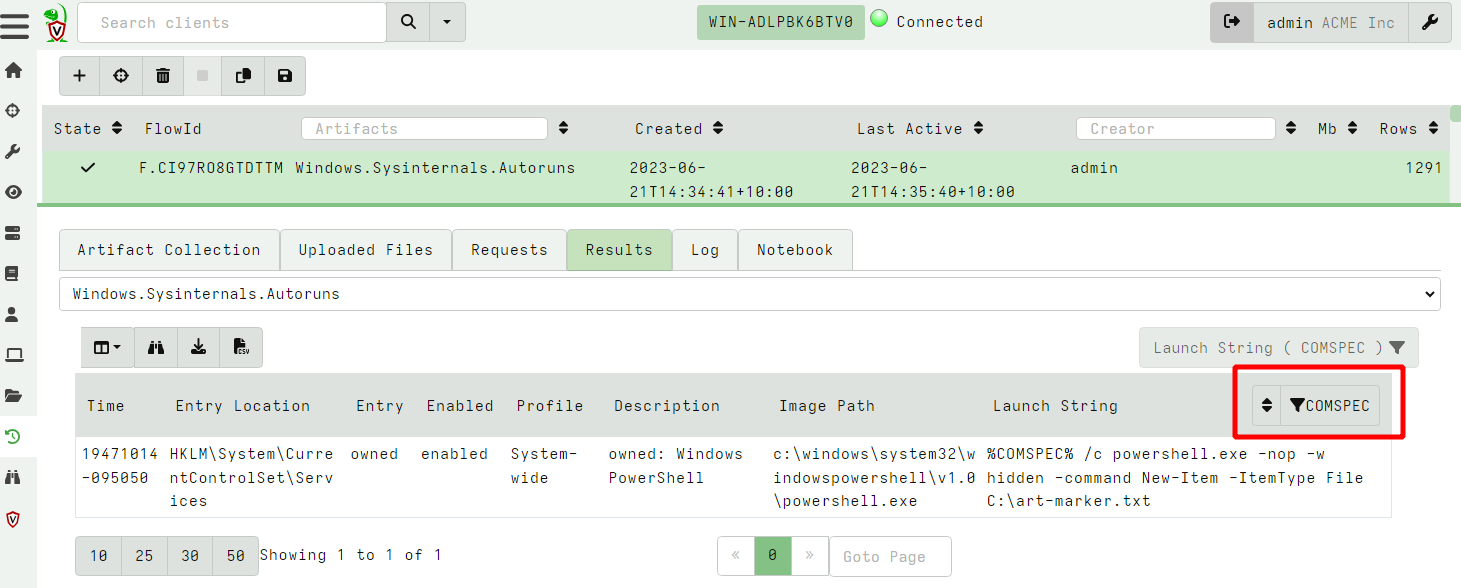

<!-- .slide: class="title " -->

# Using external tools

<img src="tools.png" class="title-inset" />

---

<!-- .slide: class="content " -->

## Why use external tools?

* Velociraptor has a lot of built-in functionality, but it cannot cover all use cases!
* Velociraptor can automatically use external tools:
    * Velociraptor will ensure the tool is delivered to the endpoint.
    * The tool can be called from within VQL.
    * VQL can parse the output of the tool, thereby presenting the output in a structured way.
    * VQL can then further process the data.

---

<!-- .slide: class="content " -->

## Velociraptor Tools

* Tools are cached locally on the endpoint and are only re-downloaded when their hashes change.
* Admins can control:
    * Where the tool is served from (Serve Locally or from Upstream).
    * Whether to override the tool with their own.
* Artifact writers can specify:
    * The usual download location for the tool.
    * The name of the tool.
* Velociraptor is essentially an orchestration agent.

---

<!-- .slide: class="content " -->

## Autoruns

* Autoruns is a Sysinternals tool.
* It searches for many different types of persistence mechanisms.
* We could reimplement all its logic, OR
* We could just use the tool!

---

<!-- .slide: class="content small-font" -->

## Autoruns

https://learn.microsoft.com/en-us/sysinternals/downloads/autoruns

---

<!-- .slide: class="content " -->

## Let's add a persistence mechanism

Using PowerShell, run the following:

```
powershell> sc.exe create owned binPath= "%COMSPEC% /c powershell.exe -nop -w hidden -command New-Item -ItemType File C:\art-marker.txt"
```

https://github.com/redcanaryco/atomic-red-team/blob/master/atomics/T1569.002/T1569.002.yaml

---

<!-- .slide: class="full_screen_diagram" -->

## Launching the Autoruns artifact

---

<!-- .slide: class="full_screen_diagram" -->

## Configuring the Autoruns tool

---

<!-- .slide: class="full_screen_diagram" -->

## Detect the malicious service

---

<!-- .slide: class="content " -->

## Artifact encapsulation

* Use Autoruns to specifically hunt for services that use `%COMSPEC%`.
* Artifact writers just reuse other artifacts without needing to worry about tools.

```yaml
name: Custom.Windows.Autoruns.Comspec
sources:
  - query: |
      SELECT * FROM Artifact.Windows.Sysinternals.Autoruns()
      WHERE Category =~ "Services" AND `Launch String` =~ "COMSPEC"
```

---

<!-- .slide: class="content optional" data-background-color="antiquewhite" -->

## Exercise - Use Sysinternals DU

* Write an artifact that uses Sysinternals' `du64.exe` to calculate the storage used by directories recursively.

---

<!-- .slide: class="content " -->

## Third party binaries summary

* `Generic.Utils.FetchBinary` on the client side delivers files to the client on demand.
* It automatically maintains a local cache of binaries.
* Declaring a new tool is easy.
* Admins can override tool behaviour.
* The same artifact can be used online and offline.
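
Velociraptor's real tool handling lives in the server and in `Generic.Utils.FetchBinary`. The following is only a minimal Python sketch of the caching rule described above, that a tool is re-downloaded only when its hash changes. It assumes the expected SHA-256 is known from the tool definition. `ensure_tool()` and its `download` callback are hypothetical names.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Callable


def sha256_of(path: Path) -> str:
    """Hex SHA-256 of a file, read in 1 MiB chunks so large binaries are fine."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def needs_download(cached: Path, expected_sha256: str) -> bool:
    """True when there is no cached copy or its hash no longer matches."""
    if not cached.is_file():
        return True
    return sha256_of(cached) != expected_sha256.lower()


def ensure_tool(cached: Path, expected_sha256: str,
                download: Callable[[Path], object]) -> Path:
    """Return a verified local copy, calling download() only when needed."""
    if needs_download(cached, expected_sha256):
        download(cached)  # hypothetical fetcher that writes the binary to `cached`
        if needs_download(cached, expected_sha256):
            raise ValueError(f"downloaded file does not match {expected_sha256}")
    return cached


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "tool.exe"
        payload = b"not really a binary"
        expected = hashlib.sha256(payload).hexdigest()
        ensure_tool(target, expected, lambda p: p.write_bytes(payload))
        print("cached copy verified:", not needs_download(target, expected))
```

Keying the cache on the hash, not the file name, lets an admin override a tool simply by changing its expected hash.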

<!-- .slide: class="title " -->

# Automating the Velociraptor Server

---

<!-- .slide: class="content " -->

## Server artifacts

* Server automation is performed by exporting server administration functions as VQL plugins and functions.
* This allows the server to be controlled and automated using VQL queries.
* Server artifacts encapsulate VQL queries that perform certain actions.
* Server monitoring artifacts watch for events on the server and respond.

---

<!-- .slide: class="content " -->

## Example: Client version distribution

* 30-day active client count grouped by version:

```sql
SELECT count() AS Count,
       agent_information.version AS Version
FROM clients()
WHERE timestamp(epoch=last_seen_at) > now() - 60 * 60 * 24 * 30
GROUP BY Version
```

---

<!-- .slide: class="content " -->

## Server concepts

* `Client`: A Velociraptor instance running on an endpoint. It is identified by a `client_id` and indexed in the client index.
* `Flow`: A single artifact collection instance. A flow can contain multiple artifacts. Each artifact can have many sources, and each source can upload multiple files.
* `Hunt`: A collection of flows from different clients. Hunt results consist of the results from all the hunt's flows.

---

<!-- .slide: class="content small-font" -->

## Exercise - label clients

* Label all Windows machines that have a certain local username.
    1. Launch a hunt to gather all usernames from all endpoints.
    2. Write VQL to label all the clients with user "mike".

This can be used to label hosts based on any property or grouping that makes sense. Now we can focus our hunts on only these machines.

---

<!-- .slide: class="content small-font" -->

## Exercise - label clients with event query

* The previous method requires frequent hunts to update the labels. What if a new machine is provisioned?
* Label all Windows machines with a certain local username using an event query.
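
The two labelling exercises differ mainly in batch versus streaming processing. The minimal Python sketch below assumes each result row carries a `ClientId` and the account name in a `Name` column; check the columns of the artifact you actually hunt with. In VQL the resulting action would be a call to the `label()` function. The function and class names here are hypothetical.

```python
from typing import Iterable, Mapping, Optional, Set, Tuple


def clients_to_label(rows: Iterable[Mapping[str, str]], username: str) -> Set[str]:
    """Batch (hunt) version: every ClientId whose results include `username`."""
    wanted = username.casefold()  # Windows account names are case-insensitive
    return {row["ClientId"] for row in rows
            if row.get("Name", "").casefold() == wanted}


class StreamingLabeler:
    """Event-query version: decide per row, labelling each client only once."""

    def __init__(self, username: str, label: str) -> None:
        self.wanted = username.casefold()
        self.label = label
        self.labelled: Set[str] = set()

    def on_row(self, row: Mapping[str, str]) -> Optional[Tuple[str, str]]:
        """Return a (client_id, label) action for a new match, else None."""
        client_id = row["ClientId"]
        if row.get("Name", "").casefold() != self.wanted or client_id in self.labelled:
            return None
        self.labelled.add(client_id)
        return client_id, self.label
```

Remembering which clients are already labelled makes the streaming version idempotent, so repeated events for the same machine do not trigger repeated label operations.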

---

<!-- .slide: class="content small-font" -->

## Exercise: Server management with VQL

* We frequently use the offline collector to collect from systems we have no direct access to.
* Write a server event query to automatically import new collections uploaded to:
    1. A Windows share
    2. An S3 bucket

---

<!-- .slide: class="content optional" data-background-color="antiquewhite" -->

## Exercise: Automating hunting

* Sometimes we want to run the same hunt periodically.
* Automate scheduling a hunt that collects Scheduled Tasks every day at midnight.

---

<!-- .slide: class="content " -->

## Event Queries and Server Monitoring

* We have previously seen that event queries can monitor for new events in real time.
* We can use this to monitor the server via the API using the `watch_monitoring()` VQL plugin.
* The Velociraptor API is asynchronous. When running event queries, the `gRPC` call will block and stream results in real time.

---

<!-- .slide: class="content " -->

## Exercise - Watch for flow completions

* We can watch for any flow completion events via the API.
* This allows our API program to respond whenever someone collects a certain artifact, e.g.:
    * Post-process it and relay the results to another system.
    * Automatically collect another artifact after examining the collected data.

```sql
SELECT * FROM watch_monitoring(artifact='System.Flow.Completion')
```

---

<!-- .slide: class="content " -->

## Server Event Artifacts

* The Velociraptor server also offers a permanent Event Artifact service, which runs all event artifacts server side.
* We can use this to refine and post-process events using only artifacts. We can also react to client events on the server.

---

<!-- .slide: class="content small-font" -->

## Exercise: Powershell encoded cmdline

* PowerShell can accept a base64-encoded script on the command line.
  This makes it harder to see what the script does, so many attackers launch PowerShell with this option.
* We would like to keep a log on the server of the decoded PowerShell scripts.
* Our strategy will be:
    1. Watch the client's process execution logs as an event stream on the server.
    2. Detect execution of PowerShell with encoded parameters.
    3. Decode the parameter and report the decoded script.
    4. Use a regex to generate an escalation alert.

---

<!-- .slide: class="content " -->

## Exercise: Powershell encoded cmdline

* Generate an encoded PowerShell command using:

```
powershell -encodedCommand ZABpAHIAIAAiAGMAOgBcAHAAcgBvAGcAcgBhAG0AIABmAGkAbABlAHMAIgAgAA==
```

Wait a few minutes for events to be delivered.

---

<!-- .slide: class="content small-font" -->

## Alerting and escalation

* The `alert()` VQL function will generate an event on the `Server.Internal.Alerts` artifact.
* Alerts are collected from **all** clients or from the server.
* Alerts have a name and arbitrary key/value pairs.
* Alerts are deduplicated on the source.
* Your server can monitor that queue and issue an escalation to an external system:
    * Discord
    * Slack
    * Email

---

<!-- .slide: class="content " -->

## Exercise: Escalate alerts to Slack/Discord

* Your instructor will share the API key for Discord channel access.
* Write an artifact that forwards escalations to the Discord channel.
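
Taken together, the encoded-command strategy and the escalation exercise form a small pipeline: find the encoded argument, decode it, test it against a regex, and format a chat message. In the exercise itself this is written in VQL, where the `http_client()` VQL plugin is one way to perform the POST. The minimal Python sketch below is only for reasoning about, and testing, the decoding and formatting logic. All function names are hypothetical, and the alert regex is whatever you pass in. The payload assumes Discord's webhook JSON body with a `content` field; Slack incoming webhooks use `text` instead.

```python
import base64
import json
import re
import urllib.request
from typing import Optional


def find_encoded_argument(cmdline: str) -> Optional[str]:
    """Return the value passed to -EncodedCommand (or an abbreviation of it)."""
    tokens = cmdline.split()
    for flag, value in zip(tokens, tokens[1:]):
        if len(flag) > 1 and flag.startswith("-"):
            name = flag[1:].lower()
            # Accept prefixes such as -e and -enc, plus the -ec alias.
            if name == "ec" or "encodedcommand".startswith(name):
                return value.strip('"')
    return None


def decode_powershell(encoded: str) -> Optional[str]:
    """Invert -EncodedCommand: base64 -> UTF-16LE bytes -> text."""
    try:
        return base64.b64decode(encoded, validate=True).decode("utf-16-le")
    except ValueError:  # covers binascii.Error and UnicodeDecodeError
        return None


def analyse(cmdline: str, alert_pattern: re.Pattern) -> Optional[dict]:
    """Decode an encoded PowerShell command line and flag it if it matches."""
    encoded = find_encoded_argument(cmdline)
    script = decode_powershell(encoded) if encoded else None
    if script is None:
        return None
    return {
        "CommandLine": cmdline,
        "Script": script,
        "Alert": alert_pattern.search(script) is not None,
    }


def discord_payload(name: str, details: dict) -> bytes:
    """Build a Discord webhook body; Discord caps `content` at 2000 characters."""
    lines = [f"**{name}**"] + [f"{key}: {value}" for key, value in details.items()]
    return json.dumps({"content": "\n".join(lines)[:2000]}).encode("utf-8")


def post_webhook(url: str, body: bytes) -> int:
    """POST a JSON body to a webhook URL and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=body,
        method="POST",
        # An explicit User-Agent, since some services reject urllib's default.
        headers={"Content-Type": "application/json",
                 "User-Agent": "alert-forwarder-sketch"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

Run against the command line from the exercise, `decode_powershell(find_encoded_argument(...))` yields `dir "c:\program files" `.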

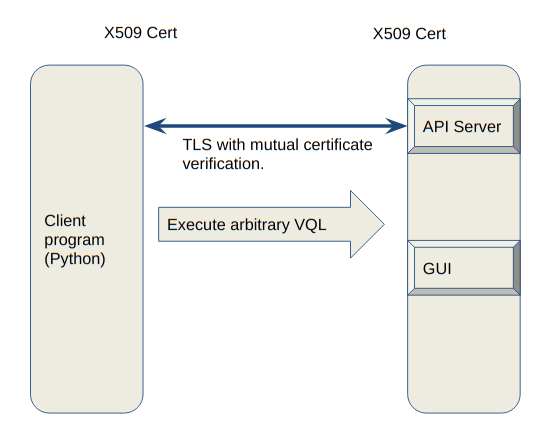

<!-- .slide: class="title " -->

# The Velociraptor API

## Controlling the beast!

---

<!-- .slide: class="content " -->

## Why an API?

* Velociraptor needs to plug into a much wider ecosystem.
* Velociraptor can itself control other systems.
    * This can already be done with the `execve()` and `http_client()` VQL plugins.
* Velociraptor can be controlled by external tools.
    * This allows external tools to enrich and automate Velociraptor.
    * This is what the API is for!

---

<!-- .slide: class="full_screen_diagram" -->

### Velociraptor API Server overview

---

<!-- .slide: class="content " -->

## Velociraptor API Server overview

* TLS authentication occurs through pinned certificates. The client and server are mutually authenticated, and both must have certificates issued by Velociraptor's trusted CA.
* Once authenticated, API clients can execute arbitrary VQL.

---

<!-- .slide: class="content " -->

## The Velociraptor API

* The API is extremely powerful, so it must be protected!
* The point of an API is to allow a client program (written in any language) to interact with Velociraptor.
* The server mints a certificate for the client program to use. This allows the program to authenticate and establish a TLS connection with the API server.
* By default the API server only listens on 127.0.0.1, so you need to reconfigure it to open it up.

---

<!-- .slide: class="content " -->

## Create a client API certificate

```
velociraptor --config server.config.yaml config api_client --name Mike --role administrator api_client.yaml
```

* Update the API connection string if needed.

<img src="api_connection_string.png" class="inset">

---

<!-- .slide: class="content " -->

## Grant access to API key

* The API key represents a user, so you can manage access through the normal user management GUI.
* To be able to call into the API, the user needs the `api` role.
* Access to push events to an artifact queue:
    * Allows an API client to publish an event to one of the event queues.
```
velociraptor --config /etc/velociraptor/server.config.yaml acl grant Mike '{"publish_queues": ["EventArtifact1", "EventArtifact2"]}'
```

---

<!-- .slide: class="content " -->

## Export access to your API

* Normally Velociraptor listens on the loopback interface only.
* If you want to use the API from external machines, enable binding to all interfaces:

```yaml
API:
  hostname: 192.168.1.11
  bind_address: 0.0.0.0
  bind_port: 8001
  bind_scheme: tcp
  pinned_gw_name: GRPC_GW
```

---

<!-- .slide: class="content small-font" -->

## Using the API to connect to the server

* The velociraptor binary can use the API directly to connect to a remote server:

```
velociraptor --api_config api_client.yaml query "SELECT * FROM info()"
```

* Using Python, for example:

```
pip install pyvelociraptor
pyvelociraptor --config api_client.yaml "SELECT * FROM info()"
```

---

<!-- .slide: class="content small-font" -->

## Schedule an artifact collection

* You can use the API to schedule an artifact collection:

```sql
LET collection <= collect_client(
    client_id='C.cdbd59efbda14627',
    artifacts='Generic.Client.Info', args=dict())
```

* This just schedules the collection. Remember, the client may be offline for an indefinitely long time! When the client completes the collection, the results will be available.

---

<!-- .slide: class="content " -->

## Waiting for the results

* When a collection is done, the server will deliver an event to the `System.Flow.Completion` event artifact.
* You can watch this to be notified of flows completing.

```sql
SELECT * FROM watch_monitoring(artifact='System.Flow.Completion')
WHERE FlowId = collection.flow_id
LIMIT 1
```

* This query will block until the collection is done! This could take a long time!

---

<!-- .slide: class="content " -->

## Reading the results

* You can use the `source()` plugin to read the results from the collection.
```sql
SELECT * FROM source(client_id=collection.request.client_id,
    flow_id=collection.flow_id,
    artifact='Generic.Client.Info/BasicInformation')
```

* You must specify a single artifact/source to read at a time with the `source()` plugin.

---

<!-- .slide: class="content " -->

## Exercise: Put it all together

* Write VQL that, when called via the API, collects an artifact from an endpoint and reads all the results in one query.
* Encapsulate it in a reusable artifact.
* Call it from the API.
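
The exercise chains three steps: schedule, block until the completion event arrives, then read one artifact/source. The minimal Python sketch below models only that control flow, with in-memory stand-ins instead of a real API connection. The `schedule`, `completions` and `read_source` callables are hypothetical placeholders for `collect_client()`, `watch_monitoring()` and `source()`. The `FlowId` field mirrors the completion events used above.

```python
from typing import Callable, Iterable, Iterator, List, Mapping, Optional


def wait_for_flow(events: Iterable[Mapping], flow_id: str) -> Optional[Mapping]:
    """Return the first completion event for flow_id, or None if the stream ends.

    Like `WHERE FlowId = collection.flow_id LIMIT 1`: the filter discards other
    flows, and stopping at the first match is what lets the query finish.
    """
    return next((event for event in events if event.get("FlowId") == flow_id), None)


def collect_and_read(
    schedule: Callable[[str, str], str],
    completions: Callable[[], Iterator[Mapping]],
    read_source: Callable[[str, str, str], Iterable[Mapping]],
    client_id: str,
    artifact: str,
    source: str,
) -> List[Mapping]:
    """Schedule, block until complete, then read one artifact/source."""
    flow_id = schedule(client_id, artifact)  # cf. collect_client()
    if wait_for_flow(completions(), flow_id) is None:  # cf. watch_monitoring()
        raise RuntimeError(f"event stream ended before {flow_id} completed")
    # cf. source(): one artifact/source per call.
    return list(read_source(client_id, flow_id, f"{artifact}/{source}"))
```

In this sketch the completion stream is opened only after scheduling, so a flow that finished in between would be missed. Whether that matters depends on how quickly your clients respond.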

<!-- .slide: class="content " -->

## Review And Summary

* Velociraptor is essentially a collector of data.
* Velociraptor has a number of ways to integrate with, and be controlled by, other systems.
* VQL provides `execve()`, allowing Velociraptor to invoke other programs and parse their output seamlessly.
* On the server, VQL exposes server management functions, allowing the server to be automated with artifacts.

---

<!-- .slide: class="content " -->

## Review And Summary

* The Velociraptor server exposes VQL via a streaming API, allowing external programs to:
    * Listen for events on the server.
    * Command the server to collect and respond.
    * Enrich and filter data on the server for better triaging and response.